RAM, or random-access memory, is an essential component of any computer system because it stores a large amount of data that the CPU can access quickly and efficiently. This helps to prevent any hiccups in the system’s operation.

These days, DDR4 is the norm for system memory, which is installed in the motherboard’s DIMM slots separately from the CPU. But the GPU’s memory chips are on the PCB, and there are a few more flavors of RAM to be found on the various graphics cards of 2022.

In short, those are GDDR5, GDDR5X, GDDR6, HBM, and HBM2. So, what makes them different, and should you take that into account when selecting the best GPU for your needs?

Find out by reading on!

Random Access Memory – RAM

RAM has been around in some form since 1947, and it has come a long way since then. What, exactly, is random-access memory?

RAM is not the same as other forms of storage media like hard drives or optical discs. RAM allows data to be read or written in roughly the same amount of time regardless of where it is physically located in memory, so any piece of data can be updated at any time.

RAM is not the first place the CPU looks for data. In the memory hierarchy, RAM sits between the cache memory and the hard drive. Since cache memory is located directly on the CPU chip and the cost of data transfer is lower, the CPU will use it first.

When the cache, which can be as large as 256 MB on today’s high-end CPUs, cannot service a request, the CPU turns to the RAM and pays the higher transfer cost. Using hard drive space as overflow memory is also possible, though it is a last resort and usually does not improve performance.

Video RAM – VRAM

VRAM is a variant of DRAM (dynamic random access memory) designed for very specific purposes. Similarly to how RAM is used to feed information into the central processing unit, VRAM is meant to aid the GPU’s memory needs.

Information like textures, frame buffers, shadow maps, bump maps, and lighting are all stored in VRAM to aid the graphics chip. The most obvious factor in the necessary amount of VRAM is the display resolution, but there are many others.

The frame buffer needs to be able to store images of around 8.3 MB each (at 32 bits per pixel) in order to support Full HD gaming. If you’re using 4K resolution for your games, that jumps to 33.2 MB per image. This exemplifies not only why modern GPUs use more and more VRAM with each iteration, but also why developers experiment with different types of VRAM to get the best results.
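The frame buffer figures can be reproduced with a quick calculation. This is a minimal sketch that assumes 32-bit color (4 bytes per pixel) and decimal megabytes; `frame_buffer_bytes` is a hypothetical helper used only for illustration.

```python
def frame_buffer_bytes(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Size of one uncompressed frame, assuming 4 bytes (32 bits) per pixel."""
    return width * height * bytes_per_pixel


resolutions = {"Full HD": (1920, 1080), "4K": (3840, 2160)}

for name, (width, height) in resolutions.items():
    size_mb = frame_buffer_bytes(width, height) / 1_000_000  # decimal megabytes
    print(f"{name}: {size_mb:.1f} MB per frame")
```

Real games keep several such buffers in VRAM at once, alongside textures and other data, so the total requirement is far higher than a single frame.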

Anti-aliasing (AA) is another feature that can have a negative impact on performance. To smooth out jagged edges, some AA techniques render the image at a higher resolution or sample it multiple times, which takes extra memory and processing. Visually, AA can make a huge difference, but it may cause a drop in frame rate.

CrossFire and SLI allow multiple GPUs to work together, but their memory does not add up. Because the same data is mirrored across the connected cards, you effectively have the same amount of memory as you would with a single card.
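The memory arithmetic for a multi-GPU setup can be sketched as follows. This is a rough illustration that assumes simple mirroring of data across cards; `effective_vram_gb` is a hypothetical helper, not part of any driver or vendor API.

```python
def effective_vram_gb(cards_gb: list[float]) -> float:
    """Usable VRAM when every card holds a mirrored copy of the same data."""
    if not cards_gb:
        raise ValueError("at least one card is required")
    # Mirroring means capacities do not add up; the smallest card sets the limit.
    return min(cards_gb)


# Two 8 GB cards still behave like a single 8 GB card.
print(effective_vram_gb([8, 8]))
```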

Keep in mind that your specific requirements determine how much VRAM you need. Having twice as much VRAM as the game you’re playing requires won’t help, but if you only have 512 MB of VRAM, performance will suffer dramatically.

The time has come to investigate the various VRAM options and weigh their benefits and drawbacks.

GDDR

The first is GDDR SDRAM, which is used in the vast majority of modern GPUs due to its superior performance. GDDR SDRAM is an abbreviation for Graphics Double Data Rate Synchronous Dynamic Random-Access Memory. So, it’s a form of DDR SDRAM (just like DDR4), but it’s optimized for handling graphical tasks in conjunction with a GPU.

The original GDDR has gone through several revisions since its inception. DDR SGRAM (synchronous graphics RAM) was the original name, but it was shortened to GDDR (Graphics Double Data Rate) for the subsequent generations. These include GDDR2, GDDR3, GDDR4, GDDR5, GDDR5X, and GDDR6.

It’s the last three that we’ve mentioned in the introduction to this article, so let’s compare and contrast them.

First, there’s GDDR5, which has been the standard for graphics RAM since around 2010 (when mainstream GPUs started to adopt it).

The next generation of memory, GDDR5X, was essentially a speed boost for GDDR5 itself. Although it was introduced in 2016, very few Pascal-based GPUs from Nvidia actually used it.

GDDR6 is the most recent technology, and it’s already found in most of Nvidia’s Turing lineup (GTX 16 and RTX 20 series) and AMD’s RDNA GPUs (RX 5000 series).

The key distinctions between these three forms of RAM center on their respective speeds and power consumption. As you probably guessed, the newer technologies are quicker and use less energy. The quoted data transfer rates are 40-64 GB/s for GDDR5, 80-112 GB/s for GDDR5X, and 112-128 GB/s for GDDR6.

How significant is this, exactly, in terms of games?

Benchmark comparisons of the GDDR5 and GDDR6 versions of the GTX 1650 show that the differences in performance are minimal at best, amounting to only a handful of FPS in most games.

In practice, the GPU’s processing power will be far more consequential than the RAM type.

Since GDDR6 memory is included in nearly all modern GPUs, you probably won’t ever have to decide between GDDR5, GDDR5X, and GDDR6.

Only if you’re considering an older GPU model should you do so; in that case, you should always check some benchmarks to see how its performance stacks up against that of more recent, similarly priced graphics cards.

HBM

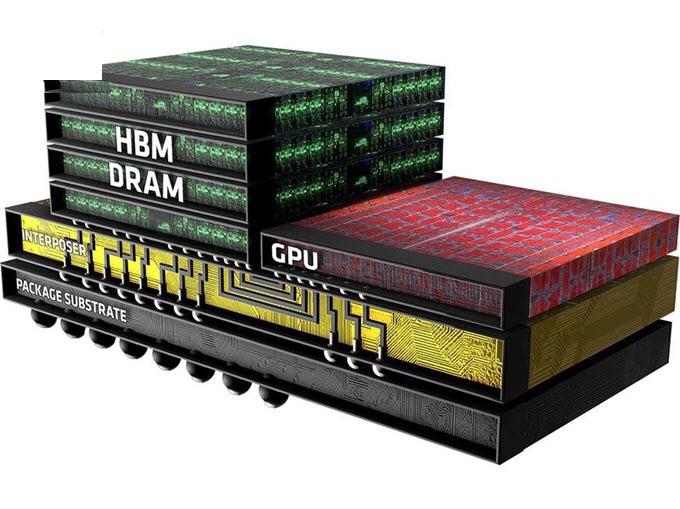

The name High Bandwidth Memory (HBM) gives away what this RAM type has to offer in comparison to GDDR RAM: higher bandwidth. HBM is superior to GDDR in terms of PCB footprint, power consumption, and data transfer rate. This is made possible by “stacking” multiple DRAM dies (up to eight in total) on top of each other.

The first thing you might notice about HBM is its memory bus, which begins at 1024 bits per stack. The more stacks there are, the wider the memory bus, and most HBM-equipped GPUs released so far have either a 2048-bit or a 4096-bit memory bus.

This makes the memory bus on most GDDR configurations seem puny in comparison, as the vast majority of currently available GDDR-equipped GPUs only support a memory bus width of 128 to 384 bits.

And then there’s the bandwidth. HBM offers significantly more bandwidth than GDDR, starting at 128 GB/s per stack for HBM, 256 GB/s for HBM2, and a whopping 460 GB/s for HBM2E, the newest variant, which has not yet been implemented in any GPUs.

Actual GPU implementations of HBM can provide anywhere from 307 GB/s to a whopping 1024 GB/s, with the latter being found in high-end graphics solutions like the Radeon VII and other workstation-oriented graphics cards.
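How stack count drives both bus width and total bandwidth can be sketched with simple multiplication. The sketch below assumes the 1024-bit-per-stack bus and the 256 GB/s per-stack HBM2 figure quoted above, and it further assumes that bandwidth scales linearly with the number of stacks; the function names are hypothetical.

```python
BUS_BITS_PER_STACK = 1024  # bus width of a single HBM stack


def bus_width_bits(stacks: int) -> int:
    """Total memory bus width for a given number of HBM stacks."""
    return stacks * BUS_BITS_PER_STACK


def total_bandwidth_gb_s(stacks: int, per_stack_gb_s: float) -> float:
    """Total bandwidth, assuming it scales linearly with stack count."""
    return stacks * per_stack_gb_s


for stacks in (2, 4):
    width = bus_width_bits(stacks)
    bandwidth = total_bandwidth_gb_s(stacks, 256)  # 256 GB/s per HBM2 stack
    print(f"{stacks} stacks: {width}-bit bus, {bandwidth:.0f} GB/s")
```

Under these assumptions, the four-stack case yields a 4096-bit bus and 1024 GB/s, which lines up with the top figure mentioned for high-end cards.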

However, the more pressing issue is how much of an impact HBM actually has in video games.

A side-by-side in-game performance comparison of three of Nvidia’s flagship Titan GPUs makes for a useful test: the GDDR5X-equipped Titan Xp, the HBM2-equipped Titan V, and the GDDR6-equipped Titan RTX.

Tests show that the Titan RTX outperforms the Titan V and the Titan Xp by a margin of 5-10 frames per second (FPS) across the board. However, improvements in GPU architecture, rather than the graphics memory itself, account for most of the performance differences.

But if HBM2 has such a large amount of bandwidth, why isn’t it having a greater effect?

This is because the vast majority of 2022 video games will not take advantage of this technology to its full potential (in terms of either bandwidth requirements or optimization). GPUs with built-in HBM memory are typically designed for use in workstations and memory-intensive software, rather than games.

It’s easy to see why most modern gaming GPUs use GDDR memory instead of HBM, considering how expensive HBM is to produce and the limited benefits it currently offers in terms of gaming.

GDDR5

GDDR5 is the oldest memory technology we’ll examine. It’s no longer the best option available, but it’s far from obsolete.

Its full name is graphics double data rate type five synchronous dynamic random-access memory. This lengthy name may seem difficult to decipher at first, but we will break it down below.

The “graphics double data rate” part should be fairly self-explanatory. DDR simply means that data is transferred on the bus on both the rising and falling edges of the clock signal. Compared to the previous standard, SDR (Single Data Rate), DDR (Double Data Rate) doubles performance because data is transferred twice in a single clock cycle.
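The doubling can be shown with a small calculation. This is only a sketch: the 100 MHz clock is a hypothetical value chosen for illustration, and `transfers_per_second` is not taken from any real library.

```python
def transfers_per_second(clock_hz: float, transfers_per_cycle: int) -> float:
    """Number of data transfers per second on a bus with the given clock."""
    return clock_hz * transfers_per_cycle


CLOCK_HZ = 100e6  # hypothetical 100 MHz bus clock

sdr = transfers_per_second(CLOCK_HZ, 1)  # one transfer per clock cycle
ddr = transfers_per_second(CLOCK_HZ, 2)  # rising edge plus falling edge

print(f"SDR: {sdr / 1e6:.0f} MT/s, DDR: {ddr / 1e6:.0f} MT/s")
```

The clock itself does not run any faster; the gain comes purely from using both edges of each cycle.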

The “G” in the name indicates a high-bandwidth interface designed for graphics, making it possible to process the specialized data required for graphical calculations much more quickly.

Even though the “5” in GDDR5 suggests a later generation, it actually relies on DDR3 as its foundation. While there are some differences, both types of memory serve specific purposes and perform those functions well.

In this context, “SDRAM” means synchronous dynamic random-access memory. Since we’ve already covered RAM’s definition, let’s shift our attention to the qualifiers.

It’s simple to dismiss these concepts as generic marketing jargon, but there’s actually more to it than that.

When discussing random-access memory (RAM), the term “dynamic” refers to the fact that each individual bit of data is stored in a memory cell made up of one transistor and one capacitor. Because the capacitor gradually leaks its charge, DRAM is a volatile form of memory; if the device loses power, all stored information is erased.

To counteract this leakage, an external circuit performs memory refreshing, a process that periodically reads each cell and rewrites the same data to restore the charge in the capacitor. The “synchronous” designation means that the memory’s operations are kept in step with a clock signal shared with the processor it supports, which improves the processor’s ability to carry out instructions.

How effective is GDDR5, then?

This innovation in technology was a game-changer. There have been significant developments, however, since the early 2010s.

GDDR5 was introduced in 2007, and mass production started in early 2008. It wasn’t surpassed until GDDR5X came out. This means that GDDR5 was the dominant graphics memory technology for almost a decade, an unusually long run in computing.

One reason for this is that GDDR5’s memory capacities grew over time, from 512 MB all the way up to 8 GB. Some saw this growth as a generational leap, even though technically it was not one.

The latest and greatest GDDR5 version was designed to handle 4K resolutions, and its specifications are nothing to scoff at. First and foremost, each pin can transfer 8 Gb/s (that’s gigabits; at 8 bits per byte, this equals 1 gigabyte per second). Compared to the previous norm of 3.6 Gb/s, this is a significant increase.

A GDDR5 chip comes in a 170-pin package, but only 32 of those pins carry data. Multiplying the 8 Gb/s per pin by the 32 data pins gives a total bandwidth of 256 Gb/s (32 GB/s) for a single chip.
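The per-chip arithmetic can be checked in a few lines. This sketch assumes that only the 32 data pins of the chip contribute to bandwidth; the helper names are hypothetical.

```python
BITS_PER_BYTE = 8


def chip_bandwidth_gbit_s(per_pin_gbit_s: float, data_pins: int = 32) -> float:
    """Peak bandwidth of one memory chip in gigabits per second."""
    return per_pin_gbit_s * data_pins


def gbit_to_gbyte(gbit: float) -> float:
    """Convert gigabits to gigabytes."""
    return gbit / BITS_PER_BYTE


total_gbit = chip_bandwidth_gbit_s(8)  # 8 Gb/s per pin
print(f"{total_gbit:.0f} Gb/s per chip = {gbit_to_gbyte(total_gbit):.0f} GB/s")
```

The same formula with 7 Gb/s and 14 Gb/s per pin gives 28 GB/s and 56 GB/s, the per-chip figures quoted for GDDR5 and GDDR5X in the next section.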

Even though GDDR5 has been outperformed, it is still used in some graphics cards; however, these cards are typically found in mobile devices.

GDDR5X

Although it was initially dismissed as just another update, this memory type is a direct successor to GDDR5. This misconception quickly faded away, and it is now evident that GDDR5X was just as much of an upgrade from GDDR5 as GDDR6 was from GDDR5X.

We are able to make this claim because GDDR5X doubled the capabilities of the prior-generation GDDR5. GDDR5X has a per-chip bandwidth of 56 GB/s, while GDDR5 has 28 GB/s. Its memory speed has doubled, from 7 Gb/s (875 MHz) to 14 Gb/s (1750 MHz), though the resulting bandwidth is still significantly lower than HBM’s.

It also uses less power than GDDR5: it requires 1.35 V, compared to GDDR5’s 1.5 V.

GDDR5X isn’t as fast as GDDR6, as we’ve already established, and its name gives it away. There are still some excellent graphics cards that use GDDR5X, but you should probably go with GDDR6 or even HBM2.

HBM2

HBM2 is the successor to HBM, just as GDDR5X is to GDDR5. In the same way that GDDR5X doubled the performance of GDDR5, HBM2 doubled the performance of HBM.

HBM2E, despite its name, does not relate to HBM2 in the same way that GDDR5X relates to GDDR5: it is an incremental improvement rather than a doubling. In this section, we will examine HBM2 as well as HBM2E.

HBM2, which was released in 2016, doubled HBM’s maximum capacity per stack to 8 GB and its maximum transfer rate to 2 Gb/s per pin. Bandwidth per stack doubled as well, from 128 GB/s to 256 GB/s.

These figures describe HBM2 at launch; HBM2E has since moved them forward.

The transfer rate is now 2.4 Gb/s per pin, which raises bandwidth to 307 GB/s per stack. Because these improvements are incremental, the technology is appropriately named HBM2E and not HBM3. What was truly remarkable, though, was the maximum capacity increase from 8 GB to 24 GB, a figure rarely matched even by high-end GDDR6 cards.
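Per-stack bandwidth follows directly from the 1024-bit stack interface and the per-pin transfer rate. The sketch below is an illustration under stated assumptions: the 1 Gb/s rate for first-generation HBM is inferred from the doubling described above, and 2.4 Gb/s on a 1024-bit bus works out to roughly 307 GB/s.

```python
STACK_BUS_BITS = 1024  # interface width of one HBM stack
BITS_PER_BYTE = 8


def stack_bandwidth_gb_s(pin_rate_gbit_s: float) -> float:
    """Peak bandwidth of one stack in GB/s for a given per-pin rate."""
    return STACK_BUS_BITS * pin_rate_gbit_s / BITS_PER_BYTE


# Per-pin rates in Gb/s; the HBM value is an inferred assumption.
pin_rates = {"HBM": 1.0, "HBM2": 2.0, "HBM2E (2.4 Gb/s)": 2.4}

for generation, rate in pin_rates.items():
    print(f"{generation}: {stack_bandwidth_gb_s(rate):.1f} GB/s per stack")
```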

HBM is still in its infancy, and HBM2 is even younger; as a result, incorporating it into consumer goods is an extremely expensive proposition. GDDR6 is the obvious choice for AMD’s RX 6000 series, and it’s not hard to see why.

In 2017, analysts predicted that purchasing HBM2 would set you back between $150 and $170. Even when compared to the priciest GDDR6 memory, which costs around $90 for 8GB, that is a lot of money.

The price of HBM2 memory has likely decreased since 2017, though. Only time will tell whether HBM3 can combine that level of performance with cost effectiveness.

GDDR6

Even though this GDDR update wasn’t as anticipated as GDDR5X, it has been met with a warmer reception so far. Despite the fact that the background on generational leaps has already been covered, it is still helpful to compare the numbers.

GDDR5 topped out at a data rate of 8 Gb/s per pin. The NVIDIA GTX Titan X shows what it could do at the high end: 12 GB of memory and a maximum bandwidth of 336.5 GB/s.

The GTX 1080 Ti uses GDDR5X memory, with a data rate of 11 Gb/s, a bandwidth of 484 GB/s, and a total capacity of 11 GB.

At long last, the market’s current darling, the RTX 2080 Ti, features GDDR6 memory. It has 11 GB of memory and a data rate of 14 Gb/s per pin. The staggering 616 GB/s bandwidth is by far the most significant enhancement.
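Card-level bandwidth is the memory bus width multiplied by the per-pin data rate, divided by eight to convert bits to bytes. The sketch below is illustrative only: the bus widths (384-bit and 352-bit) and the per-pin rates are assumptions taken from commonly published spec sheets and chosen because they reproduce the quoted bandwidth figures.

```python
BITS_PER_BYTE = 8


def card_bandwidth_gb_s(bus_width_bits: int, pin_rate_gbit_s: float) -> float:
    """Peak memory bandwidth of a graphics card in GB/s."""
    return bus_width_bits * pin_rate_gbit_s / BITS_PER_BYTE


# (bus width in bits, per-pin data rate in Gb/s); assumed values, see above.
cards = {
    "GTX Titan X (GDDR5)": (384, 7.0),
    "GTX 1080 Ti (GDDR5X)": (352, 11.0),
    "RTX 2080 Ti (GDDR6)": (352, 14.0),
}

for name, (bus_width, pin_rate) in cards.items():
    print(f"{name}: {card_bandwidth_gb_s(bus_width, pin_rate):.0f} GB/s")
```

The Titan X result comes out at 336 GB/s rather than 336.5 GB/s because the sketch rounds its per-pin rate to 7 Gb/s.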

Obviously, these cards are designed with the average consumer in mind. Professional graphics processing units are quickly approaching the 1 TB/s bandwidth mark.

GDDR6X

The next generation of GPUs from both AMD and NVIDIA keeps making headlines as their release dates get closer and closer. NVIDIA officially started the era of GDDR6X, as had been predicted. The reveal was hardly unexpected, because it follows the same naming convention that led from GDDR5 to GDDR5X.

Given the long gap between GDDR5 and GDDR5X, many predicted that neither AMD nor NVIDIA would rush to release GDDR6X. However, GDDR6X arrived only about two years after GDDR6. It seems that the GPU war has resumed, and neither side can afford to lose ground at this time.

PAM4 signaling is the primary differentiating factor of GDDR6X technology, doubling the effective bandwidth and enhancing clock efficiency and speed. The potential of PAM4 has been investigated before. Even earlier than that, in 2006, Micron began working on it.
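The idea behind PAM4 can be illustrated with a toy encoder: instead of sending one bit per symbol using two signal levels, it sends two bits per symbol using four levels, so the same bit stream needs half as many symbols. The Gray-coded level mapping below is an assumption made for illustration and is not taken from the GDDR6X specification.

```python
# Map each pair of bits to one of four signal levels (assumed Gray coding).
PAM4_LEVEL = {(0, 0): 0, (0, 1): 1, (1, 1): 2, (1, 0): 3}


def pam4_encode(bits: list[int]) -> list[int]:
    """Pack a bit stream into PAM4 symbols, two bits per symbol."""
    if len(bits) % 2 != 0:
        raise ValueError("PAM4 needs an even number of bits")
    return [PAM4_LEVEL[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


bits = [1, 0, 0, 1, 1, 1, 0, 0]
symbols = pam4_encode(bits)

# Two-level signaling would need one symbol per bit, i.e. len(bits) symbols.
print(f"{len(bits)} bits -> {len(symbols)} PAM4 symbols: {symbols}")
```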

Micron is pleased with NVIDIA’s release of GDDR6X and has praised the companies’ collaboration on the technology’s development. This means that while AMD can only offer GDDR6, NVIDIA’s RTX 3080 and RTX 3090 will use GDDR6X.

What Does The Future Hold for VRAM?

In the HBM community, there is buzz about when HBM3 might be released. These rumors haven’t gained much traction in the past few years, but it’s reasonable to think that a new generation of memory chips is on the horizon. Even though it has since been widely assumed that those HBM3 rumors were actually about HBM2E, it’s nice to be optimistic.

With regards to GDDR7, all we can do is speculate. Technology like GDDR6X is still fairly new, but with shorter and shorter delays between releases, GDDR7 might be announced sooner than we think.

Which VRAM Reigns Supreme?

Given these specifics, either HBM2 or GDDR6 should be considered for the time being. Both forms of video memory have advantages and disadvantages, so picking one will depend on your specific circumstances.

Because of its wider bus, HBM2 is the top pick for intensive workloads like those found in machine learning, AI simulations, 3D modeling, and video editing. In contrast, GDDR6 is where your money should go if you’re serious about gaming.

Both GDDR6 and HBM2 can be used to play games, but different memory is better suited to different tasks.

What Should You Get?

Now that you have a basic understanding of the nuances between the various forms of VRAM, the question becomes: which one should you get? There are five different types mentioned here, so making a comparison between them could be tricky. The key to choosing between them is to consider a number of criteria and then know which VRAM type performs best under those conditions. You can quickly rule out any additional possibilities.

Here are five categories and the winners in each, to give you a hand.

Performance

It goes without saying that performance is your top priority. Does using more bandwidth necessarily result in better performance? The answer is yes and no.

If the game requires more bandwidth than your VRAM can provide, then the answer is yes: your performance will suffer, and you will notice a difference if you upgrade to VRAM with higher bandwidth. However, if your game only requires a low bandwidth, then the extra bandwidth you purchase will be wasted. Besides, the performance gain from upgrading from GDDR5X to GDDR6 is relatively small.

Even more so, when it comes to gaming performance, the VRAM is not the most important factor. The GPU design has the greatest impact, so if you’re thinking of increasing your VRAM, double-check the bandwidth your games will need.

Power Consumption

A perfect VRAM would have a lot of storage space, a lot of transfer speed, and use very little power. The current leader here is HBM2. GDDR6 consumes less energy than GDDR5 and GDDR5X, but its power consumption is nowhere near as low as HBM2’s.

Power consumption is something to consider because there is always the chance of overheating. Obviously, a VRAM with a higher power consumption is more likely to overheat. This isn’t the only advantage of HBM2 over GDDR, but it’s a big one if you’re concerned about your VRAM overheating.

Usage

For what purpose are you using the VRAM? Gaming, sure, but what kinds of games do you plan on playing? Whether or not you need a card with HBM2 depends on how often you plan to play virtual reality and augmented reality games. Due to its superior bandwidth and capacity, HBM2 is the only VRAM worth considering for VR and AR applications.

However, if you plan on playing more pedestrian shooters, GDDR6 is the better buy due to its speed and lower price tag. In addition, you don’t waste money on unnecessary unused space. You’re wasting your money even if you get a cheap HBM2 because you’re not using it to its full potential.

Should we conclude that HBM2 has proven to be useless? Not at all. HBM2 has applications beyond the gaming industry, so it’s not a waste even if you don’t play virtual reality or augmented reality games. In fact, HBM memory can be useful for graphics-intensive programs. Those who work in the fields of visual design and video editing will find that HBM2 is the ideal video random access memory (VRAM).

Price

There’s also the matter of cost to think about. The GDDR iterations, and GDDR5 in particular, are the way to go if you’re just starting out with PC building and don’t want to spend too much. The GDDR5 is a popular choice because it’s a mid-range VRAM that doesn’t break the bank.

You can go with GDDR6 or HBM if you don’t mind spending more money on the VRAM. The only catch is that HBM varieties are more difficult to come by, so tracking one down may cost you extra time and money.

For Future Use

What about future use? It’s important to know which VRAM types will still be in stock in the coming years. GDDR5 is no longer a good long-term option now that it has been on the market for so long and everyone’s focus has shifted to GDDR5X and GDDR6.

At this point in time, not even the original HBM is immune to becoming obsolete. Why? Less and less of it is being produced and sold, and not because everyone already has it. Choose between GDDR5X, GDDR6, and HBM2 for a reliable and future-proof VRAM.

Considering these factors, HBM and HBM2 emerge as the undisputed frontrunners in terms of data storage and transfer rates. It’s either GDDR5X or GDDR6 when it comes to cost and availability. Now that you know the options, it’s up to you to prioritize them. Some gamers don’t put much thought into choosing a VRAM because their GPU does most of the heavy lifting anyway and more RAM is no guarantee of better performance.

Conclusion

In conclusion, then:

- As of 2022, GDDR6 is the most widely adopted variant of specialized GDDR graphics memory used by modern GPUs. It is somewhat faster than GDDR5X and provides more than twice the bandwidth of GDDR5.

- While HBM graphics memory has a much higher bandwidth than GDDR6, its high manufacturing costs and limited benefits in terms of real-time in-game performance do not make it particularly appealing for gaming in 2022. The most recent HBM memories are HBM2 and HBM2E, but HBM2E has not yet been implemented in any GPUs.

However, the type of memory a graphics card has is not the most crucial factor to consider when making your purchase.

As you can see from the article, the GTX 1650 is an excellent test card because it comes in two different memory types (GDDR5 and GDDR6), and a direct comparison of the two shows that the differences between the two are negligible in today’s games.

Besides, as was already mentioned, the only time you’d have to decide between GDDR5, GDDR5X, and GDDR6 is if you were considering using an older GPU for whatever reason, such as looking for a more cost-effective solution that might provide better value for your money. If you want an accurate picture of how something will perform, you should look into some benchmarks and comparisons rather than relying on the specifications listed on paper.

The majority of HBM2-equipped GPUs are designed for workstations and come with very hefty price tags, making them a waste of money if you intend to use one solely for gaming.

The Radeon VII is the only GPU available in 2022 that features HBM2 memory and is suitable for gaming; however, it is only worth purchasing if you can find it for less than the AMD RX 5700 XT or the Nvidia RTX 2070 Super, both of which offer comparable in-game performance and normally sell at significantly lower prices.

In any case, if you’re looking for a new GPU, you should examine our picks for the top graphics cards of 2022.

Source: https://gemaga.com
