
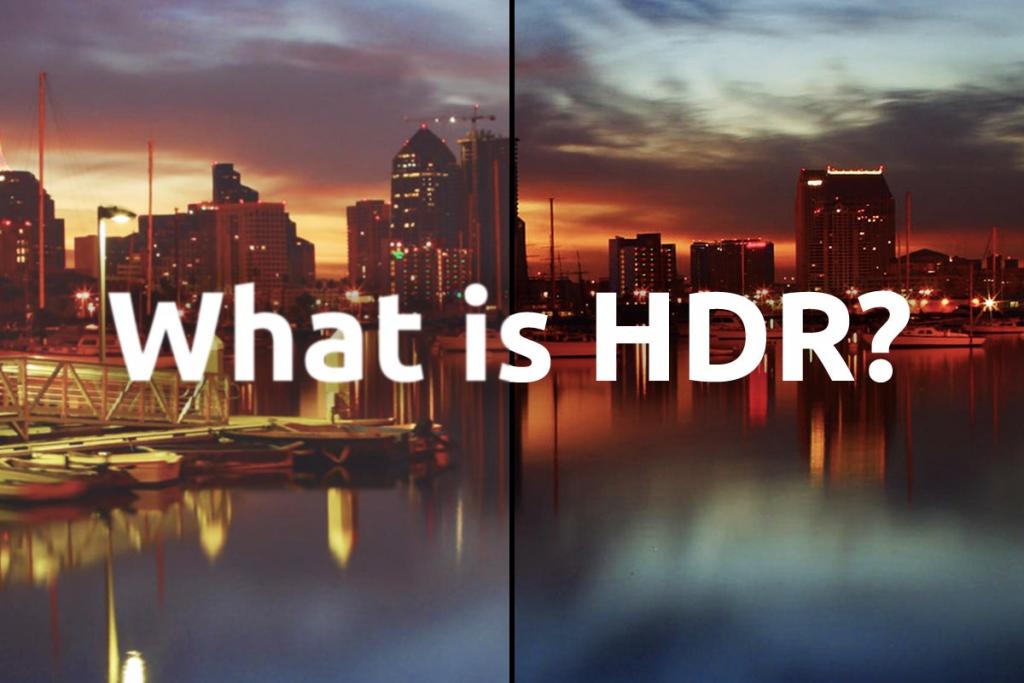

HDR is a technology that greatly improves image quality by expanding the dynamic range of a display, allowing for more detail in both brighter and darker areas of the image. The expanded color palette really brings out the vividness of the various hues.


Even though many players have yet to experience 4K, the technology is already old news in the eyes of the tech industry.

HDR is currently trending.

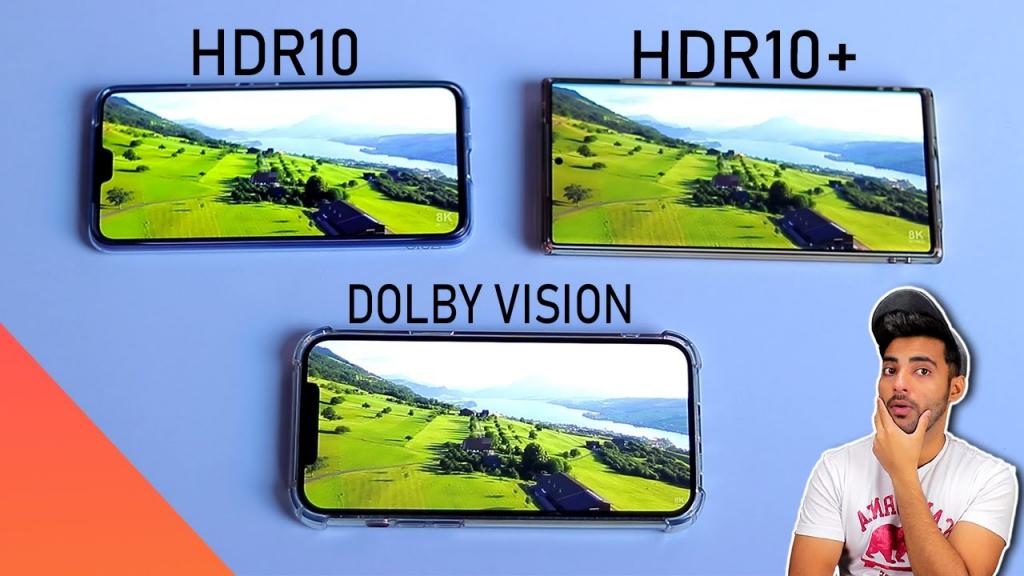

While 4K is relatively straightforward (all 4K displays have the same number of pixels), HDR is much more nebulous and, dare we say, predatory in how it delivers on the promises it makes. It doesn’t help that there are multiple HDR standards, such as HDR10, HDR10+, Dolby Vision, and others.

Here, we’ll explain what high dynamic range (HDR) is and how it’s supposed to work, before breaking down the various encoding standards and detailing how well they actually work in practice.

What Is HDR?

The idea behind high dynamic range (HDR) is straightforward: to improve the viewing experience by allowing for a wider range of tonalities. True to its name, High Dynamic Range (HDR) is the opposite of Standard Dynamic Range (SDR).

An image’s dynamic range is the contrast between its brightest and darkest points. There’s more to it than that, but thinking of it as contrast gets you the gist.

A higher dynamic range should allow for a more detailed image in both the darkest shadows and brightest highlights.

It’s also important to remember that HDR-compatible content is a prerequisite for its use. As we’ll see, this is mostly relevant to movies, but it’s also supported by some video games and there’s even an effort to broadcast HDR.

The sales pitch is straightforward: if you think movies look washed out on your standard dynamic range (SDR) TV, upgrade to an HDR TV and enjoy the theater-quality experience in your own home.

HDR10

When put into practice, the premise holds up about as well as a cynic would expect, particularly when utilizing HDR10.

Because HDR10 is an open standard, display manufacturers don’t have to pay any royalties to include it in their products, which has made it the most widely used HDR encoding standard. As an added bonus, it is compatible with the greatest variety of content. Nonetheless, it has a serious flaw.

HDR10 uses static metadata. This means your TV is told, once, how bright the brightest and darkest colors in a film are, and it then sets its brightness handling for the entire movie based on those values.

These are the two main issues:

- Your TV may not be capable of displaying HDR content properly. The vast majority of HDR content is mastered on the assumption that the brightest colors will be rendered at a luminance of 1,000 nits, while budget HDR TVs can typically reach a maximum of only about 300 nits. Such a TV has to map the film’s brightest color to its own much lower peak and adjust the rest of the colors to match. As a result, most films will look better in SDR than in HDR on these TVs.

- A major issue, even if your TV handles HDR well, is that static metadata typically provides only two reference points for an entire film. Suppose you’re watching a horror film that is mostly lit very dimly but features one particularly bright explosion. Because of that one explosion, your TV may tone-map the entire movie around it, so the dim scenes that make up most of the film don’t look as intended.
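To make the first issue concrete, here is a minimal Python sketch of the crudest approach a budget TV could take: linearly scaling a film mastered for a 1,000-nit peak down to a 300-nit panel. The function `scale_to_panel` and its linear rule are hypothetical; real TVs use nonlinear tone-mapping curves, so treat this only as an illustration of why the whole image gets dimmer.

```python
def scale_to_panel(pixel_nits: float, content_peak: float, display_peak: float) -> float:
    """Hypothetical linear tone mapping driven by static metadata.

    The film's brightest value (content_peak) is mapped to the panel's
    maximum (display_peak), and every other value is scaled by the same factor.
    """
    if display_peak >= content_peak:
        return pixel_nits  # the panel can show everything as mastered
    return pixel_nits * display_peak / content_peak


# A film mastered for 1,000 nits shown on a 300-nit budget panel
for nits in (1000, 500, 100, 10):
    print(f"{nits:>5} nits in the master -> {scale_to_panel(nits, 1000, 300):.1f} nits on screen")
```

Under this assumption, a 100-nit midtone comes out at only 30 nits, which hints at why such a TV can make HDR look worse than SDR.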

Dolby Vision

Unlike HDR10, Dolby Vision is not an open standard: it is owned by Dolby, and it solves both of the problems we just mentioned.

First, HDR10 isn’t bound by any certification requirements. The standard doesn’t mandate any particular capabilities for a TV to display HDR content. Hence, you can get HDR10 TVs that actually make HDR content look worse than standard dynamic range. The ability to receive HDR signals is enough for a TV to be labeled HDR-capable, but this has nothing to do with the TV’s actual HDR display quality.

The requirements for TVs to support Dolby Vision, on the other hand, are more stringent. Manufacturers are less likely to include this proprietary technology in an HDR-unready TV because they must pay royalties to use it. Therefore, it’s highly unlikely that you’ll come across a Dolby Vision TV with poor HDR performance.

Another plus is that it makes use of Dynamic Metadata. Rather than receiving two values for an entire movie, the TV will know how bright the brightest and darkest colors are supposed to be on a frame-by-frame or scene-by-scene basis.
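Continuing the horror-film example, this hypothetical Python sketch contrasts the two approaches using crude linear scaling (real TVs use nonlinear curves). With static metadata, every scene gets the compression factor dictated by the film’s single brightest moment; with dynamic metadata, each scene is scaled by its own peak. The scene names, peak values, and the 600-nit panel are invented for illustration.

```python
# Hypothetical per-scene peak luminance (nits) for the horror-film example:
# two dim scenes and one bright explosion.
SCENE_PEAKS = {"cellar": 150.0, "hallway": 200.0, "explosion": 4000.0}
DISPLAY_PEAK = 600.0  # assumed panel peak brightness in nits


def scale_factor(content_peak: float, display_peak: float) -> float:
    """Linear compression factor needed to fit content_peak into display_peak."""
    return min(1.0, display_peak / content_peak)


# Static metadata: one peak value describes the whole film.
film_peak = max(SCENE_PEAKS.values())
static = {scene: scale_factor(film_peak, DISPLAY_PEAK) for scene in SCENE_PEAKS}

# Dynamic metadata: each scene carries its own peak value.
dynamic = {scene: scale_factor(peak, DISPLAY_PEAK) for scene, peak in SCENE_PEAKS.items()}

for scene in SCENE_PEAKS:
    print(f"{scene:10s} static={static[scene]:.2f} dynamic={dynamic[scene]:.2f}")
```

In this sketch the dim scenes keep a factor of 1.0 (shown as mastered) only when each scene has its own metadata; with a single film-wide value, they inherit the same 0.15 factor as the explosion.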

Based on this information, Dolby Vision is clearly the better choice over HDR10. Apart from the higher price, there’s little downside, since Dolby Vision TVs can still show HDR10 content in HDR.

HDR10+

It was clear from the beginning that HDR10’s Static Metadata was only ever going to be a short-term solution. HDR10+ is the result of combining HDR10’s open nature with Dynamic Metadata.

More content is compatible, and it’s less expensive than Dolby Vision.


However, this only addresses the second issue highlighted in the HDR10 segment. It’s still possible to find TVs that claim to support HDR10+ but offer inferior HDR capabilities that detract from, rather than add to, the viewing experience.

Bit Depth

A television’s color bit depth is the number of bits it uses per color channel to specify which color a pixel should display. A higher color depth lets you see more colors, with less banding in scenes with similar tones of color, like a sunset. Standard dynamic range (SDR) content typically uses 8-bit color, for a palette of 16.7 million colors, while a 10-bit color depth allows up to 1.07 billion colors. 12-bit displays go even further, allowing for a staggering 68.7 billion colors. Technically, both Dolby Vision and HDR10+ can support content with a bit depth greater than 10, but in practice, such content is limited to Ultra HD Blu-rays with Dolby Vision, and even then, not many of them use 12-bit color. HDR10 is limited to a maximum bit depth of 10.

Dolby Vision and HDR10+ are both great options here, and both deserve to be recognized as winners. Although both technically support content with bit depths above 10-bit, the vast majority of content will never reach that, and streaming content is always capped at 10-bit color depth.
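The color counts quoted above follow from simple arithmetic: with n bits per channel, each of the three RGB channels has 2^n levels, and the total palette is that number cubed. A short Python check, assuming three RGB channels:

```python
def color_count(bits_per_channel: int, channels: int = 3) -> int:
    """Number of distinct colors for a given per-channel bit depth (RGB by default)."""
    levels = 2 ** bits_per_channel  # shades available per channel
    return levels ** channels


for bits in (8, 10, 12):
    print(f"{bits}-bit: {2 ** bits:,} levels per channel, {color_count(bits):,} colors")
```

Running it gives 16,777,216 colors for 8-bit (about 16.7 million), 1,073,741,824 for 10-bit (about 1.07 billion), and 68,719,476,736 for 12-bit (about 68.7 billion).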

Peak Brightness

Watching HDR content requires a high peak brightness to make highlights stand out. Ideally, the TV should reach at least the brightness at which the content was mastered: content mastered at 1,000 cd/m² should be displayed at 1,000 cd/m².

HDR10

- Mastered between 400 and 4,000 cd/m²

- Technical limit: 10,000 cd/m²

HDR10+

- Mastered between 1,000 and 4,000 cd/m²

- Technical limit: 10,000 cd/m²

DOLBY VISION

- Mastered between 1,000 and 4,000 cd/m²

- Technical limit: 10,000 cd/m²

Dolby Vision and HDR10+ content is currently mastered between 1,000 and 4,000 cd/m², with most content mastered at around 1,000 cd/m². HDR10 doesn’t mandate a minimum brightness, but it can be mastered at up to 4,000 cd/m² depending on the source material. All three specifications support images up to 10,000 cd/m², although no display can currently reach that. Since both dynamic formats currently top out at 4,000 cd/m² in mastering, there is little to choose between them.

No format can be declared the winner here. There is currently no difference in mastering between HDR10+ and Dolby Vision, as both are mastered between 1,000 and 4,000 cd/m².
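The 10,000 cd/m² ceiling comes from the transfer function these formats use to encode brightness: HDR10 and HDR10+ (and Dolby Vision’s PQ-based profiles) use the Perceptual Quantizer (PQ) curve standardized as SMPTE ST 2084, which maps a normalized signal from 0 to 1 onto absolute luminance from 0 to 10,000 cd/m². A minimal Python sketch of the curve and its inverse, using the constants published in ST 2084:

```python
# SMPTE ST 2084 (PQ) constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PQ_PEAK = 10_000.0  # cd/m², the top of the PQ signal range


def pq_to_nits(signal: float) -> float:
    """PQ EOTF: normalized signal value in [0, 1] -> absolute luminance in cd/m²."""
    p = signal ** (1 / M2)
    y = max(p - C1, 0.0) / (C2 - C3 * p)
    return PQ_PEAK * y ** (1 / M1)


def nits_to_pq(nits: float) -> float:
    """Inverse EOTF: absolute luminance in cd/m² -> normalized PQ signal value."""
    y = (nits / PQ_PEAK) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


for nits in (100, 1000, 4000, 10000):
    print(f"{nits:>6} cd/m² -> PQ signal {nits_to_pq(nits):.3f}")
```

Because the curve is perceptual rather than linear, content mastered at 1,000 cd/m² already uses roughly three quarters of the signal range, leaving the rest for brighter highlights up to the 10,000 cd/m² limit.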

Metadata

Metadata functions as a guidebook for the content, detailing its many features. It’s packaged together with the show or movie and instructs the screen on how to best handle the material.

HDR10

- Static metadata

- Applies the same brightness and color mapping to all of the content.

HDR10+

- Dynamic metadata

- Adjusts brightness and color mapping scene by scene.

DOLBY VISION

- Dynamic metadata

- Adjusts brightness and tone mapping scene by scene, or even frame by frame.

The three formats differ in how they use metadata. HDR10 uses no dynamic metadata at all: its static metadata sets the brightness boundaries for the entire film or show once, based on the range of brightness in the brightest scene. The dynamic metadata used by Dolby Vision and HDR10+ improves on this by telling the TV how to apply tone mapping on a scene-by-scene, or even frame-by-frame, basis. This improves the overall experience by ensuring that dark scenes aren’t overexposed.

If the TV ignores the metadata and applies its own tone mapping, as some TVs do, then the HDR format’s metadata is irrelevant and the TV’s own performance is what matters.

Dolby Vision and HDR10+ are the winners. They adapt better to scenes with wildly varying lighting conditions.
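HDR10’s static metadata includes two content light level values defined in CTA-861.3: MaxCLL (the brightest pixel anywhere in the title) and MaxFALL (the highest frame-average light level). The sketch below computes both from made-up per-frame data and, for contrast, a per-scene peak as a stand-in for dynamic metadata. It is a simplification: each pixel is reduced to a single light level, and real dynamic metadata (such as HDR10+’s) carries far more than one peak value per scene.

```python
from statistics import mean

# Hypothetical luminance data in cd/m²: each scene is a list of frames,
# each frame a list of pixel light levels (real frames have millions of pixels).
scenes = {
    "dark_cellar": [[0.5, 2.0, 40.0], [0.4, 3.0, 60.0]],
    "explosion": [[5.0, 300.0, 1000.0], [10.0, 800.0, 3500.0]],
    "dark_hallway": [[0.2, 1.5, 80.0]],
}
all_frames = [frame for frames in scenes.values() for frame in frames]

# Static (HDR10-style) metadata: one pair of values for the whole title.
max_cll = max(max(frame) for frame in all_frames)    # brightest pixel anywhere
max_fall = max(mean(frame) for frame in all_frames)  # brightest frame average

# Simplified dynamic metadata: one peak value per scene.
scene_peaks = {name: max(max(f) for f in frames) for name, frames in scenes.items()}

print(f"MaxCLL={max_cll:.0f} cd/m², MaxFALL={max_fall:.1f} cd/m²")
print(scene_peaks)
```

Note how the single explosion frame determines both static values, while the per-scene peaks still describe the dark scenes accurately.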

Tone Mapping

Tone mapping describes how a TV handles colors and brightness levels it can’t natively display. In other words, if an HDR movie features a bright red in a scene but the TV can’t display that particular shade of red, how does the TV compensate? There are two common approaches. The first is clipping: anything beyond the TV’s limit is cut off at its maximum, so finer details in the brightest areas are lost and similar bright colors become impossible to tell apart.

Another common technique is for the TV to remap the range of colors, allowing it to display the necessary bright colors without clipping. The image may not show exactly the right shade of red, but it still looks natural. Instead of being clipped, highlights roll off gradually as they approach the TV’s peak luminance.

Tone mapping is handled differently across the three HDR formats. Dynamic formats like Dolby Vision and HDR10+ allow scene-by-scene tone mapping, and in some cases the content is already tone-mapped at the source, reducing the amount of work the TV has to do. HDR10, on the other hand, uses static metadata, so the same tone mapping applies to the entire film or show, which gives worse results.

HDR10+ and Dolby Vision are the winners. Both use dynamic metadata to adjust the tone mapping for each individual scene.
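The difference between clipping and a gradual roll-off is easy to see numerically. Below is a hypothetical Python sketch comparing hard clipping with a simple piecewise-linear roll-off for a 600-nit panel showing highlights mastered up to 1,000 nits. The knee at 75% of the panel’s peak is an arbitrary assumption, and real TVs use their own, usually smooth, curves.

```python
def clip(nits: float, display_peak: float) -> float:
    """Hard clipping: anything brighter than the panel can show is cut to its peak."""
    return min(nits, display_peak)


def roll_off(nits: float, display_peak: float, content_peak: float, knee: float = 0.75) -> float:
    """Illustrative piecewise-linear roll-off (an assumption, not a standard curve).

    Values up to knee * display_peak pass through unchanged; values between that
    point and content_peak are compressed linearly into the remaining headroom.
    Assumes 0 < knee < 1.
    """
    if content_peak <= display_peak:
        return min(nits, display_peak)  # everything fits, no compression needed
    knee_nits = knee * display_peak
    if nits <= knee_nits:
        return nits
    headroom = display_peak - knee_nits
    span = content_peak - knee_nits
    return knee_nits + min(nits - knee_nits, span) * headroom / span


for highlight in (700, 850, 1000):
    print(f"{highlight} nits: clip={clip(highlight, 600):.0f}, "
          f"roll-off={roll_off(highlight, 600, 1000):.1f}")
```

With clipping, 700-, 850- and 1,000-nit highlights all land on 600 nits and become indistinguishable; with the roll-off they stay distinct (roughly 518, 559 and 600 nits) at the cost of being somewhat dimmer than mastered.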

Backwards Compatibility

Since both HDR10+ and Dolby Vision are compatible with the static HDR format found on Ultra HD Blu-rays, you won’t have to worry about which format your older HDR content is in if you upgrade to a new TV. They handle this backward compatibility in different ways, though. HDR10+ adds dynamic metadata on top of HDR10 content, so an HDR10-only TV simply displays HDR10+ content without the dynamic metadata. Dolby Vision is more complex: it can use any static HDR format as a “base layer” and build on top of it. Because that base layer follows existing standards, Dolby Vision TVs can also read the static metadata on its own.

Ultra HD Blu-ray discs must always include HDR10 as a base layer. If you have a Dolby Vision disc but your TV doesn’t support Dolby Vision, no problem! The movie will still play in HDR10 on your TV. Streaming content is a different story: a Dolby Vision movie on Netflix, for example, might not include the HDR10 base layer, so even if your TV supports HDR10, the movie will only display in SDR if the TV doesn’t support Dolby Vision.

TVS THAT DON’T SUPPORT A SPECIFIC FORMAT

For example, if your TV supports only Dolby Vision or only HDR10+, you’ll get the full benefit of dynamic metadata only with content made for that format. Blu-rays encoded in a format your TV doesn’t support will play in HDR10. Since Samsung TVs don’t support Dolby Vision, you can only watch Dolby Vision Blu-rays in HDR10 on them, and if you stream a Dolby Vision movie that doesn’t have the HDR10 base layer, the content will be in SDR. TVs that support both formats will display content in the best dynamic format available.
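The fallback rules described in this section can be summarized as a small decision function. This is a hypothetical Python sketch of the logic, not any TV’s actual firmware; formats are plain strings, and ranking Dolby Vision above HDR10+ on TVs that support both is an assumption made only for illustration.

```python
# Formats in the order this sketch prefers them (the Dolby Vision vs. HDR10+
# ranking is an illustrative assumption; both carry dynamic metadata).
PREFERENCE = ("Dolby Vision", "HDR10+", "HDR10")


def playback_format(tv_supports: set[str], content_layers: set[str]) -> str:
    """Pick the best format that both the TV and the content provide, else SDR."""
    for fmt in PREFERENCE:
        if fmt in tv_supports and fmt in content_layers:
            return fmt
    return "SDR"


samsung_style_tv = {"HDR10", "HDR10+"}
dv_blu_ray = {"Dolby Vision", "HDR10"}  # Ultra HD Blu-rays carry an HDR10 base layer
dv_stream_without_base = {"Dolby Vision"}  # a stream without the HDR10 layer

print(playback_format(samsung_style_tv, dv_blu_ray))  # HDR10
print(playback_format(samsung_style_tv, dv_stream_without_base))  # SDR
```

The two calls reproduce the Samsung examples above: the Dolby Vision disc falls back to its HDR10 base layer, while the stream without one drops to SDR.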

Availability

SUPPORTED DEVICES

The new HDR formats have seen a dramatic increase in availability over the past few years. All services support at least HDR10, and the vast majority also offer Dolby Vision. Blu-rays and some streaming services, like Amazon Prime Video, are helping to increase HDR10+’s visibility.

HDR10 and Dolby Vision are the winners.

SUPPORTED TVS

Many TV models include support for HDR10, and many TV brands include support for at least one of the more advanced formats, but only a select few, including Vizio, Hisense, and TCL, include support for both. Sony and LG TVs in the US support Dolby Vision, while Samsung TVs in the US support HDR10+.

You can’t assume that lower-priced HDR TVs will make full use of their formats’ additional features. Only high-end TVs can display HDR to its full potential, so on cheaper sets most people won’t be able to tell the formats apart.

HDR10 is the winner.

GAMING

| Platform | HDR10 | HDR10+ | Dolby Vision |
| --- | --- | --- | --- |
| PS4 and PS4 Pro | Yes | No | No |
| PS5 | Yes | No | No |
| Xbox One | Yes | No | Yes |
| Xbox Series X/S | Yes | No | Yes |
| Nintendo Switch | No | No | No |
| PC | Yes | Yes | Yes |


While high dynamic range (HDR) was developed for movies, its benefits for gaming are clear. Dolby Vision is supported by Microsoft’s consoles, the Xbox One and the Xbox Series X/S. Just as with movies, HDR has to be implemented by the game’s developers. Borderlands 3, F1 2021, and Call of Duty: Black Ops Cold War are just a few of the titles on PC and consoles that support Dolby Vision. PC gamers, particularly those with a Samsung display, can take advantage of HDR10+ Gaming, an extension of HDR10+ focused on gaming, while consoles rely on Dolby Vision for now. Actual performance varies, because HDR isn’t always implemented correctly.

Check out the top-rated 4K HDR televisions for gamers that we’ve chosen.

In a dead heat, both Dolby Vision and HDR10+ are declared winners. Dolby Vision games are more common than HDR10+ ones, especially on consoles, but HDR10+ is making its way into PC gaming at a steady pace.

MONITORS

Most monitors support HDR, but that doesn’t mean they’re great for HDR; they lag behind TVs in that department. Most monitors have low contrast and low HDR peak brightness, and most support only HDR10, not HDR10+ or Dolby Vision, which means you don’t get dynamic metadata. Only on a TV can you get the full effect of high dynamic range (HDR) content.

HDR10 is the winner.

HLG

Finally, we’ll talk about Hybrid Log-Gamma, or HLG for short.

The other HDR encodings share one problem: they aren’t broadcast-friendly, because a signal encoded in them can only be viewed properly on an HDR-capable TV. In this respect, DVDs, Blu-rays, and streaming services have simply outpaced cable programming.

Therefore, the BBC and NHK collaborated to develop HLG, a form of HDR designed for broadcasting that lets viewers with HDR TVs see content in HDR while viewers with SDR TVs watch the same broadcast in SDR.
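The trick that lets one HLG signal serve both audiences is its transfer curve. As defined in ITU-R BT.2100 (and ARIB STD-B67), the lower part of the range uses a square-root curve similar to a conventional SDR camera gamma, so an SDR TV shows a reasonable picture, while the upper part switches to a logarithmic curve that packs highlights into the remaining signal range for HDR TVs. A minimal Python sketch of that curve (the OETF), using the published constants:

```python
import math

# HLG OETF constants from ITU-R BT.2100
A = 0.17883277
B = 1 - 4 * A  # 0.28466892
C = 0.5 - A * math.log(4 * A)  # about 0.55991073


def hlg_oetf(scene_light: float) -> float:
    """Map normalized scene light E in [0, 1] to an HLG signal value E' in [0, 1]."""
    if scene_light <= 1 / 12:
        # Lower part: a square-root curve, close to a conventional SDR gamma
        return math.sqrt(3 * scene_light)
    # Upper part: a logarithmic curve that compresses highlights
    return A * math.log(12 * scene_light - B) + C


for e in (0.0, 1 / 12, 0.25, 0.5, 1.0):
    print(f"E={e:.3f} -> E'={hlg_oetf(e):.3f}")
```

The two pieces meet at a signal value of 0.5, so the bottom half of the signal behaves much like ordinary SDR video and the top half carries the extra highlight range.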

HLG is an inventive way to appease both the SDR and HDR communities, even if its visual quality falls short of that of other HDR methods.

HDR Monitors

Before we wrap up, it’s important to note that HDR monitors adhere to different standards than HDR TVs, so we’ll be devoting some space here to discuss them.

Yes, you read that right. If you found the previous discussion confusing, brace yourself.

HDR10, HDR10+, and Dolby Vision aren’t what gaming monitors are marketed with. Instead, you’ll find labels like DisplayHDR 400, DisplayHDR 600, DisplayHDR 1000, etc.

![HDR10 vs Dolby Vision - What's The Difference? [Simple Guide]](https://gemaga.com/wp-content/uploads/2023/03/hdr10-vs-hdr10-plus-vs-dolby-vision-img_64141abd4d5af.jpg)

These labels are certifications from the Video Electronics Standards Association (VESA), which tests displays before approving them. And finally, the number following “DisplayHDR” actually means something: it indicates the peak brightness, in cd/m², that the display must reach to earn the label.

Some HDR gaming monitors still lack VESA certification. They simply claim HDR support, and we are expected to take the manufacturer’s word for it. You shouldn’t; if you do, you will likely regret it. The feature exists, in the sense that it can be toggled on and off, but enabling it will degrade the visual quality of games.

Unfortunately, even DisplayHDR 400 occasionally falls short of providing a satisfactory HDR experience. We believe that if you are going to play games in HDR, you should not settle for anything less than DisplayHDR 600.
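Since the tier number is the meaningful part of a DisplayHDR label, checking a monitor against the DisplayHDR 600 recommendation comes down to reading that number. The helper functions below are hypothetical: they only parse plain “DisplayHDR &lt;number&gt;” labels (other VESA label variants are not handled), and they say nothing about how well a given monitor actually performs.

```python
import re
from typing import Optional

MIN_RECOMMENDED_TIER = 600  # the minimum this article recommends for HDR gaming


def displayhdr_tier(label: str) -> Optional[int]:
    """Return the tier number from a plain VESA label such as 'DisplayHDR 600'.

    Returns None when no 'DisplayHDR <number>' label is present, e.g. a monitor
    that only advertises "HDR".
    """
    match = re.search(r"DisplayHDR\s*(\d+)", label, re.IGNORECASE)
    return int(match.group(1)) if match else None


def meets_recommendation(label: str) -> bool:
    """True if the label is DisplayHDR 600 or higher."""
    tier = displayhdr_tier(label)
    return tier is not None and tier >= MIN_RECOMMENDED_TIER


for label in ("DisplayHDR 400", "DisplayHDR600", "DisplayHDR 1000", "HDR Ready"):
    print(f"{label!r}: tier={displayhdr_tier(label)}, recommended={meets_recommendation(label)}")
```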

It’s also important to remember that not every game supports HDR. Very few games released before 2017 were designed to take advantage of HDR, though some have received it retroactively, either through patches, as with The Witcher 3, or through fan-made mods, as with Doom (1993).

Conclusion

The transition from standard dynamic range (SDR) to high dynamic range (HDR) may improve the viewing experience more than going from 1080p to 4K.

However, while all 4K monitors display the full number of pixels, not all HDR monitors deliver on their promise of good HDR. One reason for this is the wide variety of encodings used for it.

So, let’s just skim over them:

- Nowadays, HDR10 is totally out of date. It’s still around mainly because it’s useful in advertising.

- HDR10+ adds dynamic metadata, but not all HDR10+-compatible TVs have the hardware to fully realize its potential.

- Dolby Vision is the most expensive format and the most widely adopted dynamic one, but it is also the best.

- The goal of the HLG standard is to make HDR compatible with TV broadcasts.

Finally, if you’re in the market for an HDR display, you’ll want to make sure it’s VESA-certified; if you’re after the best HDR performance, aim for at least DisplayHDR 600.

If you’re still confused after reading this, you can always check out our list of the top HDR displays on the market.

Source: https://gemaga.com